Smartphone photography has come a long way since its inception, with the Apple iPhone playing a pivotal role in shaping this evolution. While the past few years have seen the iPhone’s camera lagging a bit behind its Android counterparts, the iPhone 16 Pro camera system has again placed Apple at the head of the table, setting new standards in mobile photography. On paper, the iPhone 16 Pro appears to have the same triple-sensor setup as its predecessor; however, once you get hold of the device, you will be instantly hooked by the drastic improvements.

Keep reading to learn how the iPhone 16 Pro redefines smartphone photography with its cutting-edge technological advancements.

Next-gen camera system on iPhone 16 Pro

The iPhone 16 Pro features a 48MP main camera with a second-generation sensor that doubles data transfer speeds. This upgrade drastically reduces noise and enhances clarity, even in challenging low-light conditions. Complementing this is a 48MP ultra-wide camera for expansive landscape shots and a 12MP telephoto lens with 5x optical zoom, enabling sharp, detailed photos at a distance. Unlike the previous generation, where 5x zoom was limited to the Pro Max, it is now available on both the iPhone 16 Pro and iPhone 16 Pro Max.

More pixels in the Ultra-Wide camera

A professional landscape photographer will adore the upgrade from the iPhone 15 Pro’s 12MP Ultra-Wide camera to the new 48MP Ultra-Wide camera on the iPhone 16 Pro. While the iPhone 16 Pro has the same 13mm ultra-wide lens that offers a 120-degree field of view, the 12MP sensor on the 15 Pro had just 25% of the resolution of the 48MP 24mm (1x) main camera. By introducing the new 48MP Ultra-Wide sensor, Apple ensures that you no longer have to sacrifice resolution while capturing wider perspectives.

The higher pixel count lets the sensor record finer detail, which in turn results in sharper photos. This improvement enhances wide-angle shots, making landscapes and group photos look more vivid and lifelike. The new sensor is also meant to help in low light, reducing noise and improving overall image clarity.

All-new 48MP Macro

The added bonus of the new 48MP Ultra-Wide camera is that the iPhone 16 Pro’s Macro mode uses the same camera, so Macro shots are now 48MP as well. Every Macro shot now has remarkable detail, opening up a whole new world of possibilities for capturing tiny objects, from the intricate patterns on a butterfly’s wings to the delicate textures of a flower petal. The Macro mode, combined with the phone’s AI-enhanced focusing capabilities, ensures that every shot is crisp and clear. This makes the iPhone 16 Pro a strong choice for users who enjoy macro photography.

The fastest access to a smartphone camera

The new Camera Control button is by far the most hyped feature of the iPhone 16 lineup. You now get a dedicated DSLR-like capture button to access and control the camera. Yes, one could always use the Volume or Action button to trigger the shutter, but the Camera Control not only triggers the shutter; it can also control various aspects of the camera system, such as focus, exposure, and zoom settings. With many pro-grade third-party camera apps now available, this new inclusion narrows the gap between smartphones and professional cameras.

Personalized and natural images: Photographic Styles

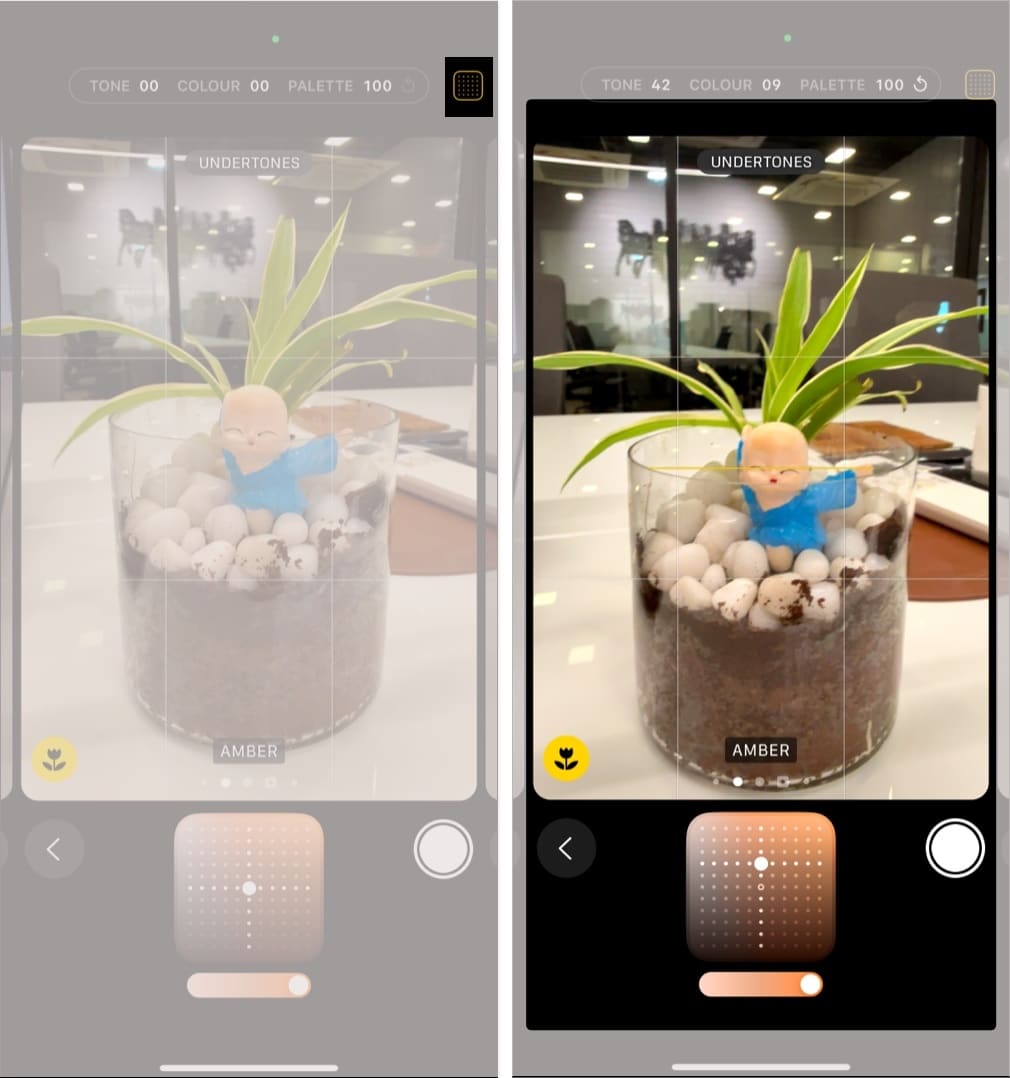

While many users might think of Photographic Styles as just another form of filters and presets, each style is in reality much more. Unlike presets or filters, Photographic Styles adapt to the subject in the frame differently in each photo, whether the subject is a person, a pet, or simply a landscape.

Any Photographic Style, when selected, applies an aesthetic to a scene based on the specific colors present in the photo, making it easier to achieve a dramatic look in your shots without the need for extensive editing. At present, Apple offers eight Photographic Styles, with the Cool Rose, Neutral, Rose Gold and Amber options bringing out skin undertones, while the Vibrant, Luminous, Quiet and Dramatic options offer mood-based aesthetics.

The cherry on top is that you can customize each style by tweaking its tone and color, creating your own custom color and tone profile for your iPhone camera. This makes the iPhone 16 Pro camera one of the few smartphone systems that can be tailored to the user’s taste. All this gives you more control over your images, even if you have little to no photography or photo-editing experience.

Pro video capture and workflow

Thanks to the new A18 Pro chip, the iPhone 16 Pro has a new image signal processor and video encoder that support 4K slow-motion video recording at 120fps in Dolby Vision. But does this new inclusion mean anything to everyday, non-professional users? Well, it does. You can now capture excellent footage even in less-than-ideal lighting conditions.

The Sony Xperia 5 II already offered 4K 120fps video recording back in 2020. Unlike the iPhone 16 Pro, however, the Xperia 5 II requires the user to switch to the separate Cinema Pro app to record the footage, which is tedious in itself. Not to mention, few other smartphone cameras perform well in poor lighting conditions, which is exactly where the iPhone 16 Pro’s 4K 120fps slow-motion capture excels.

While the iPhone has always been miles ahead of others in terms of video recording capabilities, capturing high-quality audio along with the video has always been challenging in a windy environment. With the iPhone 16 Pro, Apple has made a big leap in audio performance by adding four studio-quality mics for high-quality audio recording. The new mic setup lowers the noise floor, giving you more true-to-life sound.

In addition, the new Audio Mix option lets you adjust how voices sound in your video after you capture it. There are three options: In-Frame, Studio, and Cinematic.

The bottom line

With the iPhone 16 Pro, Apple has made the kind of meaningful progress in the camera experience that was missing in the previous few generations. All the new additions to the iPhone 16 Pro camera system, be it advanced hardware or intuitive software enhancements, improve its ability to capture professional-quality images and videos, even in challenging conditions. This makes it a must-have for creators and enthusiasts alike.

So, what do you think of the new camera system on the iPhone 16 Pro? Do share your thoughts in the comments.
